ChatGPT Apps SDK - What We've Learned So Far

OpenAI released the ChatGPT Apps SDK a few weeks ago. Though in its infancy, it will likely evolve into a thriving app ecosystem. Based on our initial experimentation, here’s what we’ve learned so far.

Introduction

In October 2025, OpenAI announced the launch of the ChatGPT Apps SDK. Essentially, the SDK handles communication between ChatGPT’s LLM and an MCP server that you configure. This reduces the custom integration work (and the technical debt that comes with it) and lets brands bring their own data into ChatGPT conversations, either through custom interactive widgets or directly through the dialogue.

What is MCP?

Model Context Protocol (MCP) is an open standard that defines how an LLM communicates with external systems, including but not limited to APIs, databases, and applications, in a secure way that preserves the structure of the data.

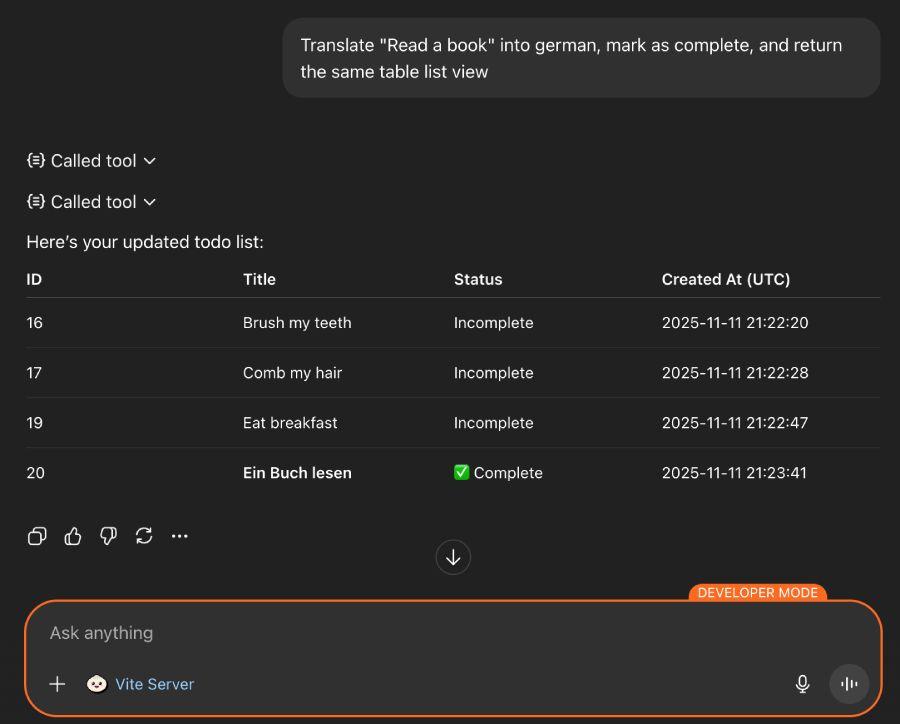

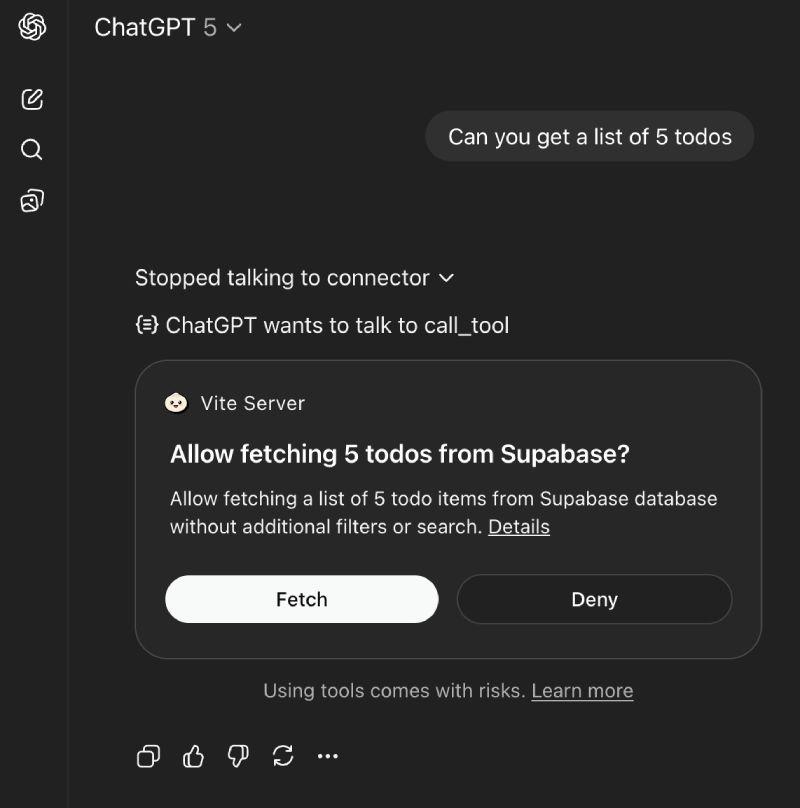

An MCP server is a backend service that implements the protocol and supplies context for the requests the LLM makes to it. The server is designed as a bridge: it can serve UI to be populated with data, request and manipulate external datasets, and even be granted CRUD capabilities to alter external data. Developers build their app on top of an MCP server, which allows ChatGPT to send requests (like “fetch data,” “update a record,” or “perform a calculation”) to the app through standardized protocols.

Translation: instead of ChatGPT just generating text, it can now interact directly with an app’s data and tools, fetching real-time information or performing actions.
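To make the request flow more concrete, here is a minimal sketch of the kind of message involved. MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method with a tool name and its arguments. The `perform_calculation` tool, its `a` and `b` arguments, and the hand-rolled `handleToolCall` dispatcher are hypothetical stand-ins for illustration; a real server would rely on an MCP SDK rather than dispatching by hand.

```typescript
// Shape of a JSON-RPC 2.0 request that invokes an MCP tool.
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

// Simplified tool result: a list of text content items.
interface ToolResult {
  content: { type: "text"; text: string }[];
  isError?: boolean;
}

// Simplified JSON-RPC response: either a result or an error.
interface ToolCallResponse {
  jsonrpc: "2.0";
  id: number;
  result?: ToolResult;
  error?: { code: number; message: string };
}

type ToolHandler = (args: Record<string, unknown>) => ToolResult;

// Hypothetical in-memory registry holding one illustrative tool.
const tools = new Map<string, ToolHandler>();
tools.set("perform_calculation", (args) => {
  const sum = Number(args.a) + Number(args.b);
  return { content: [{ type: "text", text: String(sum) }] };
});

// Toy dispatcher: look up the named tool and wrap its result in a response.
function handleToolCall(req: ToolCallRequest): ToolCallResponse {
  const handler = tools.get(req.params.name);
  if (!handler) {
    // -32602 is the JSON-RPC "invalid params" error code.
    return {
      jsonrpc: "2.0",
      id: req.id,
      error: { code: -32602, message: `Unknown tool: ${req.params.name}` },
    };
  }
  return { jsonrpc: "2.0", id: req.id, result: handler(req.params.arguments) };
}

// What a request from the model side might look like.
const request: ToolCallRequest = {
  jsonrpc: "2.0",
  id: 1,
  method: "tools/call",
  params: { name: "perform_calculation", arguments: { a: 2, b: 3 } },
};

const response = handleToolCall(request);
console.log(JSON.stringify(response, null, 2));
```

The point of the sketch is the envelope: the model never calls your code directly, it sends a named tool plus structured arguments, and the server answers with structured content.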

How is this different from a web app?

From our perspective, this isn’t an “either/or” option. Rather than replacing existing apps, think of this as an option that can be paired to enhance capabilities and productivity.

That being said, an MCP server is hosted separately and has to be reachable remotely for ChatGPT to access it. For local development, a tunnel (such as ngrok) is required to expose the application. Tool names, configuration, and descriptions have to be tightly defined, because the LLM relies on them to match a user’s prompt to the correct tool. Returned data and templates become part of the conversation they were loaded into, so follow-up prompts can build on that context to enhance and alter the data.
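One way to keep tool definitions tight is to check them before registering them. The sketch below is a hypothetical lint, not part of the Apps SDK: the `lintTool` helper, the snake_case rule, and the 20-character threshold are all assumptions chosen for illustration.

```typescript
// Minimal view of a tool definition: just the fields the model reads to pick a tool.
interface ToolDefinition {
  name: string;
  description: string;
}

// Hypothetical checks. The rules and thresholds are assumptions, not SDK requirements.
function lintTool(tool: ToolDefinition): string[] {
  const problems: string[] = [];

  // Prefer snake_case names with at least two words, e.g. "update_todo".
  if (!/^[a-z]+(_[a-z]+)+$/.test(tool.name)) {
    problems.push(`"${tool.name}": name should be snake_case, e.g. update_todo`);
  }

  // A very short description gives the model little to match a prompt against.
  if (tool.description.trim().length < 20) {
    problems.push(`"${tool.name}": description is too short to explain when to use the tool`);
  }

  return problems;
}

console.log(lintTool({ name: "update_todo", description: "Update a todo in Supabase by ID." }));
console.log(lintTool({ name: "doStuff", description: "Does stuff" }));
```

Running a check like this in CI is cheap, and it catches the vague names and one-word descriptions that tend to make tool invocation unreliable.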

So, in addition to an existing mobile app or website, brands can:

Expose their functionality directly in ChatGPT.

Let users interact with their services using natural language.

Maintain control of their data and APIs through their MCP server.

What data is shared with an app?

The data shared with an app depends on the context of your current conversation in ChatGPT and on which tools have been configured in the MCP server.

The server can connect to any third-party application by acting as a bridge to make API calls, run database queries, or simply run logic on data that has been defined and provided by the LLM. Whenever an external service is accessed through the MCP server (for example, to retrieve or delete data), the LLM asks whether the action is approved. We have more R&D to perform with authentication, but it’s a good sign that data security and governance are being accounted for.
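The MCP specification lets a server annotate each tool with hints such as `readOnlyHint` and `destructiveHint`. We have not verified exactly how ChatGPT uses these hints when deciding whether to prompt for approval, so the policy below is a hypothetical illustration of how a client could use them, with the `requiresApproval` helper and the example tool names being our own assumptions.

```typescript
// Subset of the tool annotations defined by the MCP specification.
interface ToolAnnotations {
  readOnlyHint?: boolean;    // tool does not modify its environment
  destructiveHint?: boolean; // tool may perform destructive updates
}

interface AnnotatedTool {
  name: string;
  annotations?: ToolAnnotations;
}

// Hypothetical client-side policy (an assumption, not documented ChatGPT behavior):
// only tools that explicitly declare themselves read-only skip the approval prompt.
function requiresApproval(tool: AnnotatedTool): boolean {
  return tool.annotations?.readOnlyHint !== true;
}

const exampleTools: AnnotatedTool[] = [
  { name: "list_todos", annotations: { readOnlyHint: true } },
  { name: "delete_todo", annotations: { destructiveHint: true } },
  { name: "update_todo" }, // no annotations: treated conservatively
];

for (const tool of exampleTools) {
  console.log(`${tool.name}: approval required = ${requiresApproval(tool)}`);
}
```

Defaulting to "ask first" when a tool carries no annotations is the conservative choice: a missing hint should never be read as permission to skip confirmation.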

What are the limitations of the Apps SDK?

As this is in its infancy, generating templates to be used within an MCP server is rudimentary, is very sparsely documented, and doesn’t seem to follow any common practices. 9thCO’s point of view is that templating may not be worth the time investment right now, as technical debt will accumulate while the SDK goes through serious iterations.

Debugging is currently a pain. Error messages returned from ChatGPT are vague, and resources are limited.
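Since the errors surfaced in ChatGPT are vague, it can help to capture detail on the server side. The sketch below wraps a tool handler so that failures are logged with their arguments and returned as a tool result flagged with `isError`, which is a field MCP tool results support. The `withErrorReporting` helper and its message format are hypothetical, and the `ToolResult` type is a simplified stand-in for the SDK’s own types.

```typescript
// Simplified tool result; `isError` marks a failed tool execution.
interface ToolResult {
  content: { type: "text"; text: string }[];
  isError?: boolean;
}

type Handler<A> = (args: A) => Promise<ToolResult>;

// Hypothetical wrapper: logs failures server-side and returns a readable error result
// instead of letting the exception surface as an opaque failure.
function withErrorReporting<A>(toolName: string, handler: Handler<A>): Handler<A> {
  return async (args: A) => {
    try {
      return await handler(args);
    } catch (err) {
      const message = err instanceof Error ? err.message : String(err);
      // Keep the full detail in your own logs, where you can actually read it.
      console.error(`[${toolName}] failed`, { args, message });
      return {
        content: [{ type: "text", text: `${toolName} failed: ${message}` }],
        isError: true,
      };
    }
  };
}

// Usage: wrap a handler that always fails, then call it.
const failingHandler = withErrorReporting("update_todo", async (_args: { id: number }) => {
  throw new Error("row not found");
});

failingHandler({ id: 1 }).then((result) => console.log(result));
```

Be careful about what goes into the returned text: it is visible to the model and potentially to the user, so keep secrets and stack traces in the server log only.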

Invoking the application and getting the LLM to interact with it takes… patience. Invocation is entirely context-based, so users need to be quite specific with their requests in order to actually interact with your application.

The bottom line is that while this is very promising and exciting, there are currently no real stack standards for it. There are tutorials that use a cloud server, Node, or Remix (3), while we chose to use Express. In this state, there is a lot of trial and error.

```typescript
import { z } from "zod";

// Assumes `mcp` (an McpServer instance from the MCP TypeScript SDK) and
// `supabase` (an initialized Supabase client) are created elsewhere.

// Register a tool that the LLM can use to update a todo
mcp.registerTool(
  // Tool name registered in MCP for invoking
  "update_todo",
  {
    title: "Update Todos",
    description: "Update a todo in Supabase by ID.",
    // Input schema expected from the LLM
    inputSchema: {
      id: z.number().int().describe("ID of the todo to update."),
      title: z.string().optional().describe("New title of the todo."),
      isComplete: z.boolean().optional().describe("New completion status."),
    },
    // Output schema for validation
    outputSchema: {
      todos: z.array(
        z.object({
          id: z.union([z.number().int(), z.string()]),
          title: z.string(),
          isComplete: z.boolean(),
        })
      ),
      count: z.number().int().optional(),
    },
  },
  // Handler function to process the tool's logic
  async (args: { id: number; title?: string; isComplete?: boolean }) => {
    // Extract arguments
    const { id, title, isComplete } = args;

    // Validate required argument
    if (typeof id !== "number") {
      throw new Error("ID is required to update a todo.");
    }

    // Trigger the database operation with input args provided by the LLM.
    // select() is needed so that the updated rows are returned in `data`.
    const { data, error } = await supabase
      .from("todos")
      .update({ title, isComplete })
      .eq("id", id)
      .select();

    if (error) throw new Error(error.message);

    const output = { todos: data ?? [] };

    // Return the result in the expected format
    return {
      content: [{ type: "text", text: JSON.stringify(output, null, 2) }],
      structuredContent: output,
    };
  }
);
```

Why this matters

While time will tell how the ecosystem evolves, we feel this will become a new form of brand interaction. The true power of MCP and the SDK lies not in UI replacements but in data-driven integrations:

Companies can offer ChatGPT-native versions of their services.

Users can access brand functionality in-context, without switching apps.

Integrated widgets can remember preferences and dynamically present tailored experiences.

The protocol opens new possibilities for automation, personalization, and AI-driven recommendations, using real data.

While the templating (UI) layer is still immature, the data processing and LLM interaction layer is already extremely powerful and ripe for adoption. Brands that adapt early - by building MCP-based widgets and focusing on conversational UX - can transform how users discover, interact with, and remain loyal to their services.

Stay tuned for more learnings!